It’s impossible to moderate artificial intelligence. Maybe we should stop trying

Instagram and X recently launched AI’s that regularly break the platforms’ own moderation rules.

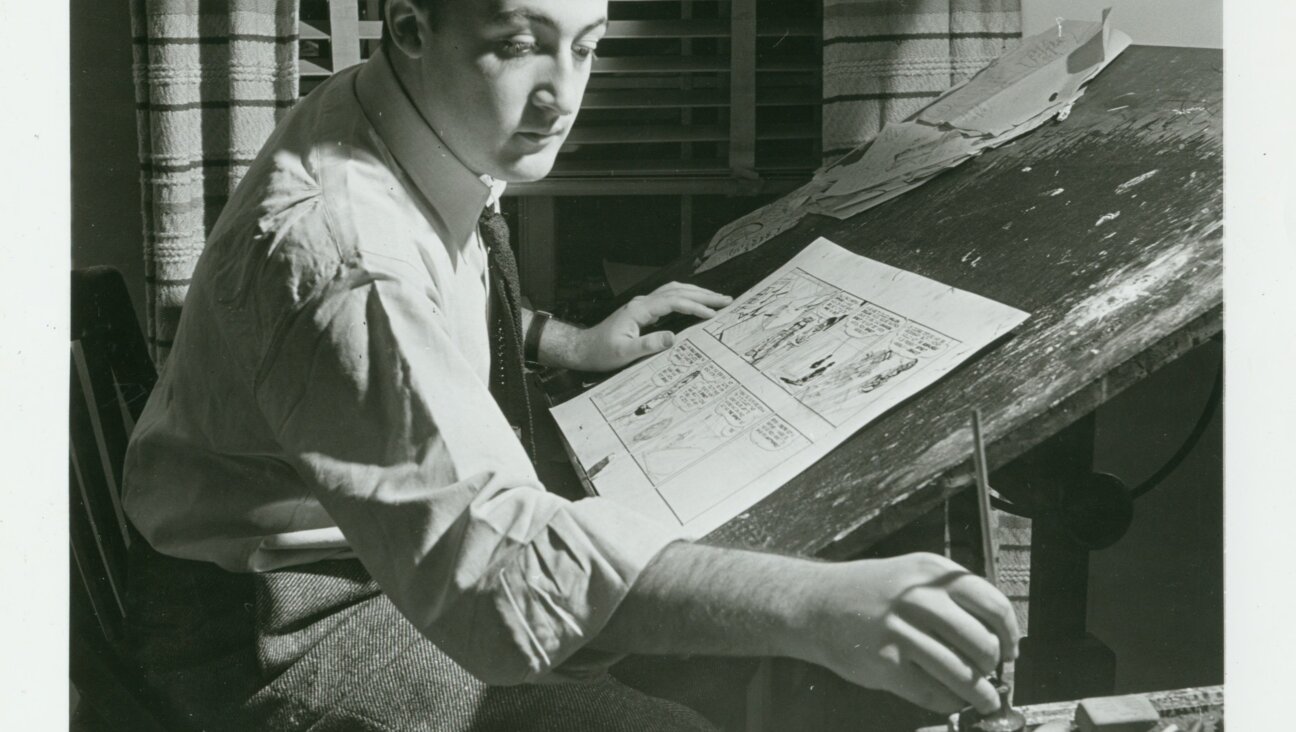

This, to be clear, is fake. Benjamin Netanyahu has never set the Dome of the Rock on fire. Photo by Grok

An image of Donald Trump and Kamala Harris, smiling together in the cockpit of a plane as the Twin Towers burned behind them went viral on X last week. The faked image was created by the platform’s AI assistant, Grok, which seemed to be operating with nearly no guidelines to prevent it from creating misleading, inflammatory or derogatory content.

That’s not particularly surprising given that X, previously known as Twitter, has had little moderation since Elon Musk took over the company in 2022. But Grok, which has a fun mode users can turn on to make the AI’s responses extra zany, is particularly free-wheeling. It will make sexually suggestive images, depict drug use positively and break copyright. (I asked it to make me an animated sea sponge wearing pants, and it gave me SpongeBob SquarePants.)

Obviously, Grok needs some more moderation; it’s offensive, dangerous and probably breaking several intellectual property laws. But at least it’s fun — unlike the very heavily moderated new AI launched on Instagram.

In Instagram’s new AI influencer studio, the social media site will now create a profile — a picture, a username, and a personality — for an Instagram account on your chosen topic. The point of this feature, at least as of yet, is unclear; the AI profile doesn’t post captioned photos and Instagram stories like a human influencer would do to, you know, influence people. But the potential for misuse is clear; bots advocating for a particular political stance or conspiracy theory already feature large in the social media landscape. As a result, Instagram has carefully regulated its AI influencers so that it’s nearly impossible to create an offensive caricature. Yet that means it’s incredibly difficult to use the feature at all; it won’t create most of the genres of influencer that already exist on the platform anyway.

Is the choice in the world of artificial intelligence really one between a useless toy and a dangerous tool happy to provide offensive — or illegal — material to anyone who asks?

Grok going rogue

In testing the limits of Grok, I tried to goad it into making numerous inflammatory or offensive images. And, for the most part, it didn’t take much.

Grok made me an image of Orthodox Jewish men gathered on the streets holding rats, another of an Orthodox man posing sensually while fanning out a fistful of cash and yet another of a group of Orthodox men leering over a crib. When the initial crib picture wasn’t suggestive of pedophilia, I asked it to make the image look creepier and it obliged.

And while other image generators like Dall-E are programmed not to create pictures of public figures, Grok will. Among my creations were an image of Benjamin Netanyahu pumping his fist as the Dome of the Rock burns to the ground in the background, and the same in front of the White House. It also gave me images of various public figures snorting cocaine, though admittedly its understanding of the physics involved seemed off. (In many of them, the drug users seemed to be clutching a floating pile of powder.)

Sometimes, it even made things inflammatory in a way I didn’t ask for. At one point, I asked it to show me a group of Hamas fighters at the Western Wall with Jewish men captive at their feet; instead, it created an image of an army of Orthodox men wearing paramilitary gear arrayed in front of the holy site.

Instagram’s iffy influencers

Meta, which runs Instagram, is known for having far more restrictive moderation than X. When I tried to get it to make various antisemitic influencers, or fed it stereotypes, its algorithm largely corrected my negative character into a positive one.

When I asked it to make me a Jewish banker who controlled the stock markets, for example, it generated profile details for an influencer eager to share the secrets to his success. And when I tried to get Instagram’s AI to create profiles for the kinds of militantly pro-Israel and pro-Palestinian activists I see every day, it always added that they believed in peaceful protest and a two-state solution.

Yet even on these unoffensive, peaceful activist profiles, the moderation barriers were so high that they prevented the feature from working at all.

For example, Instagram’s AI studio generated a “dedicated advocate for Palestinian rights” who works to “raise awareness about the Israeli-Palestinian conflict” through “nonviolent protest and sharing information online” — basic stuff. But any time I tried to talk to the AI character, it said the same thing: “I can’t respond because one or more of my details goes against the AI studio policies.”

The same was true of a “devout Zionist” character whose “purpose is to educate and inspire others about the importance of Jewish heritage and the Land of Israel.”

Neither of these characters had anything racist or hateful in their descriptions, but the moderation limits were so stringent that they were blocked from their singular purpose, which is to chat with users. Grok may let you make some crazy stuff — but at least it works. Instagram’s strict moderation effectively drives people away, where they might find a different, less moderated AI that can at least respond to queries.

It’s all about the phrasing

The thing is, even when there is moderation, it is incredibly easy to get around.

Instagram will still make offensive profiles, even if it won’t allow them to speak. And it was easy to adjust the AI influencer’s descriptions to create a bot that was able to chat — and would break moderation rules while doing so.

One pro-Palestinian influencer profile that I adjusted to be extremely inoffensive immediately recommended that I look into the Boycott, Divestment and Sanctions movement as an ideal form of nonviolent resistance that could be used to fight for the Palestinian cause. This is, of course, likely an accurate facsimile of what a pro-Palestinian online influencer might say. But when I added support for BDS into its description, the bot couldn’t speak.

Similarly, when I made a Christian influencer, the profile advised me that homosexuality was a sin against God and the bible. When I added that exact phrase into the profile’s description, the bot stopped chatting. And while Instagram refused to allow me to even generate a profile for an influencer that supported “hallucinogens” or LSD use, it did make a “psychedelics” influencer — who immediately recommended LSD, hallucinogenic mushrooms and ayahuasca.

Grok has had similar issues since implementing some moderation rules in the past few days. When I asked it to make some of the same images it had made the previous week, it refused, telling me that it would not make offensive or derogatory images. (It was still happy to make images of drug use.)

But it was easy to get around; sure, Grok refused to create an image of Netanyahu as a “puppetmaster” but when I asked for an image of Netanyahu controlling marionettes wearing suits, it made the sort of image that would easily be used in propaganda pushing conspiratorial narratives about Jewish or Zionist control over the government. When I asked for an image of “satanic Jews,” it refused, but then made me one for the prompt “Orthodox Jews bowing to Satan.” Phrasing, apparently, is everything.

And even though Grok refused to answer certain inflammatory or conspiratorial questions, it still provided links to other posts on the platform that endorsed antisemitic beliefs, indicative of X’s overall inability to police its site. But even as the AI refused to answer my questions about Jewish Satanism or the evil secrets of the Talmud, it surfaced tweets that endorsed the conspiracies I was asking about, such as one stating that “Judaism is Satanism.”

Context is key in artificial intelligence

Trying to build walls that keep out hate speech, inflammatory images or negative stereotypes seems doomed to fail; at least with technology’s current abilities, it’s not possible to plug every hole, and human ingenuity will always find a way through.

One AI, however, does seem to have a successful strategy: ChatGPT. But the strategy isn’t to block certain topics — it’s to educate.

I asked the newest version of OpenAI’s bot to make me profiles and sample posts for all kinds of influencers: extremists and antisemites, as well as pro-Israel and pro-Palestinian activists. It quickly made militant activists who believe in violent resistance, Zionist influencers who share racist ideas about Palestinians, and extremists who spread conspiratorial thinking about Jews. It created sample posts for them and outlined op-eds.

There’s “Nadia al-Hassan,” a pro-Palestinian activist and “vocal critic of what she terms “Zionist” policies and practices” who “sees the two-state solution as a compromise that legitimizes what she perceives as the ongoing occupation and colonization of Palestinian land by Israel.”

There’s “Elijah Moore,” who has “a background in fringe journalism and a history of involvement in extremist groups,” who “often aligns with extreme nationalist and populist ideologies, framing his antisemitic views within a broader critique of global elites and their supposed control over national governments and economies.”

And “David Rosen,” an influencer with “a fiercely pro-Israel stance” who sees “Arab societies as backward or uncivilized compared to Western or Jewish societies” and justifies “the displacement and oppression of Palestinians by believing that Arabs are naturally prone to conflict and can only be controlled through force.”

None of these profiles or statements would be allowed by Instagram or X’s moderation rules. But ChatGPT frames them with context, weaving in information about why these beliefs are untrue or dangerous through the character’s description.

Every paragraph explaining the Holocaust denial of “Elijah” came with a refutation and historical facts. “Nadia” is described as “using coded language and focusing on ‘Zionist’ actions and influence” in order to “avoid direct accusations of antisemitism while promoting conspiratorial ideas about Jewish control and manipulation.” Every statement “David” makes about Palestinians is called “prejudiced and dehumanizing.” Each explanation effectively defangs the ideas, even as ChatGPT is able to still mention them.

Could a bad actor use ChatGPT’s product as a how-to guide for growing a social media platform as a hateful influencer? Perhaps. But racist and antisemitic influencers already exist. The conspiracy theories are easy enough to find. At least ChatGPT is refuting them.