Upending Chomsky

Although it’s still too early to say for sure, four Israeli scientists may be at the fore of a new revolution in linguistics — or perhaps more accurately, a counterrevolution.

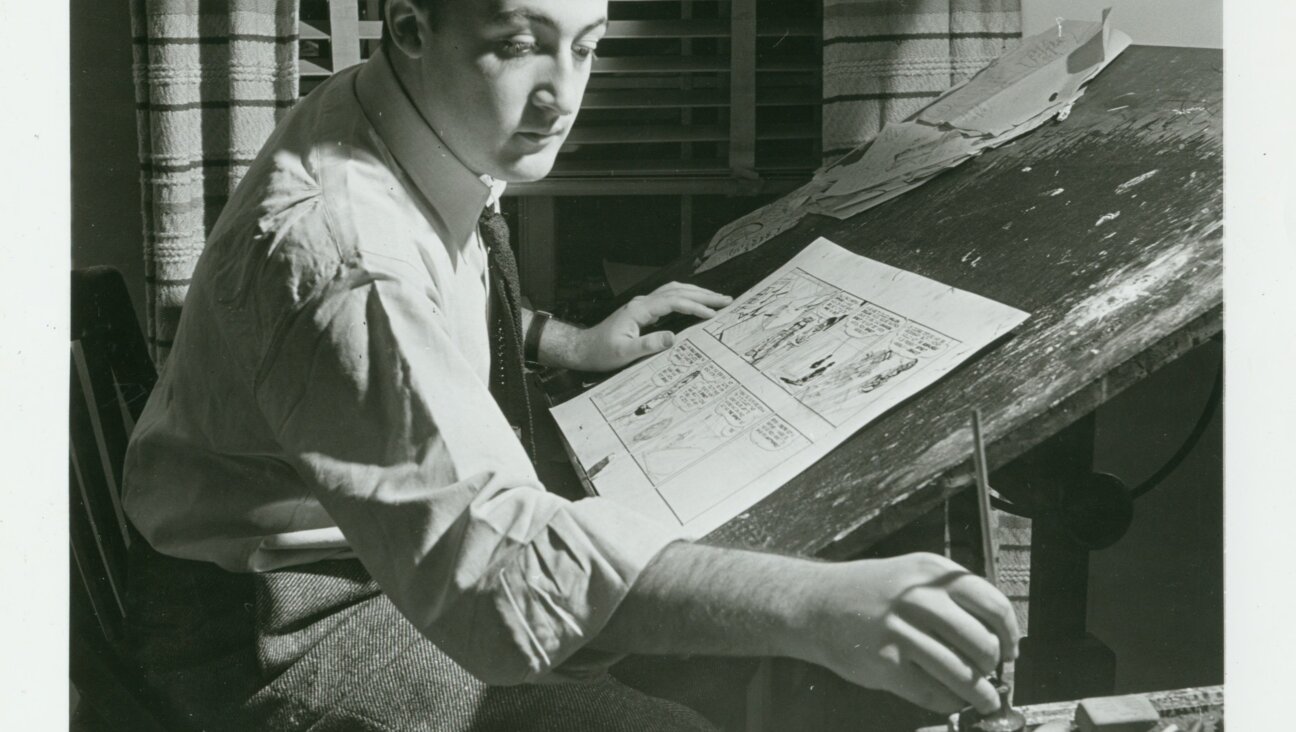

The original revolution goes back to the 1950s and is associated above all with the work of Noam Chomsky, the Massachusetts Institute of Technology linguist whose theories have dominated his field for the last half-century. (Chomsky is also of course renowned, or perhaps one should say notorious, for his extreme anti-American and anti-Israel political views, which his scientific reputation has helped him to promote.) At the time Chomsky began his career, linguists widely assumed that language was an entirely learned behavior like any other, acquired by small children solely by listening and responding to the speech of adults. After several years of increasingly accurate trial-and-error attempts to emulate this speech, it was believed, children’s speech, though still poorer than adults’ in vocabulary and intellectual complexity, progressed from baby talk to an adult level of grammar and syntax.

Chomsky was the first 20th-century linguist to systematically challenge this point of view. In such books as “Aspects of the Theory of Syntax” (1965) and “Language and Mind” (1968), he argued that strictly behavioral theories of language learning were untenable. No child, Chomsky held, can learn to speak in the same way that he learns, say, to swim or ride a bicycle. The reason is simple: Between the ages of 2 and 5, by which time most children are fully competent in their mother tongue, there are too many bewilderingly complicated grammatical and syntactical rules for a still immature mind to master by trial and error. To go within so short a period, Chomsky argued, from pointing to a lollipop and saying, “That!” to declaring, “You’d better give back that lollipop you took from me yesterday now because if you don’t I’ll tell Mommy on you” involves a pace of development that can only be explained by positing the existence of a grammatical apparatus of some sort that is innate in the brain of every child, even if it is activated only at a certain age.

Most of Chomsky’s long career as a linguist has been devoted to trying to establish just what this innate grammar might be and to demonstrate that it is universal, meaning that it exists at a sufficiently high level of abstraction to account for the grammar of every language in the world. (In other words, it can’t include a rule like “Adjectives always precede the nouns they modify,” because while this is true of some languages, like English, it is untrue of others.) His efforts have led him and his disciples to adopt and discard a large number of theories, known by such technical names as “transformational grammar,” “government-binding theory,” “X-bar theory,” “the principles-and-parameters approach” and “the minimalist program,” each proposing a different set of linguistic rules said to be part of the mental structure we are born with.

And yet despite his enormous influence, Chomsky has never been able to prove that any of these theories can satisfactorily account for the grammars of all spoken languages, or even of any single one of them. His main claim to fame still rests largely on his initial contention, subsequently accepted by the great majority of his fellow linguists, that — whatever its precise nature — we must indeed be born with a mental grammar of some sort that enables us to acquire language as quickly as we do.

Now, however, in an article published in the August 8 issue of Proceedings of the National Academy of Sciences and titled “Unsupervised Learning of Natural Languages,” the Israelis Zach Solan, David Horn, Eytan Ruppin and Shimon Edelman, two of them physicists, one a computer scientist and one a psychologist, argue otherwise. They have, the four maintain, developed a mathematical model (or as they put it, “an unsupervised algorithm that discovers hierarchical structure in any sequence of data”) that explains how a child, unaided by an innate mental grammar of any kind, can learn languages as diverse as English and Chinese by precisely the trial-and-error method that Chomsky dismissed as insufficient. Moreover, this model, they write, has actually been tested on computers. When fed between 10,000 and 120,000 sentences in languages previously unknown to them (not an enormous quantity, considering the number of sentences spoken to an average 3- or 4-year-old every day), the computers were able to decode these languages’ grammatical structure and to produce grammatically acceptable sentences in them.

I must confess that, having tried reading “Unsupervised Learning of Natural Languages,” I lack the mathematics to follow it very far. Moreover, even if the algorithm in question works, it would still have to be demonstrated, in order to refute Chomsky’s theory of “innate grammar,” that such a model, or one like it, is itself innate in the human mind. But for linguists, the possible implications are enormous. An entire era may be about to come to an end — and with it a good part of the reputation of the man most identified with it. It would be poetic justice indeed if the Israel-bashing Chomsky were knocked off his scientific pedestal by a group of scientists from Israel.
