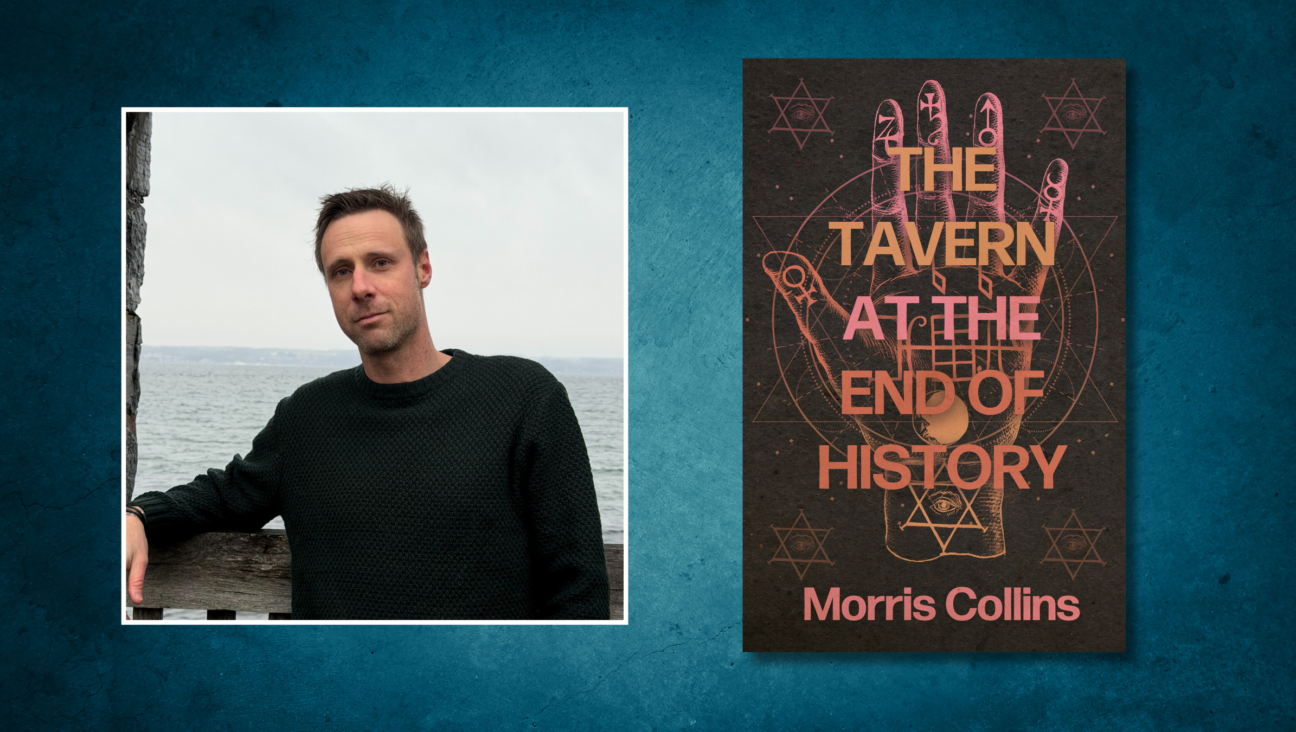

Google is answering search queries with AI now. What does that mean for Holocaust history?

Artificial intelligence can often distort facts or be used by bad actors.

Google’s logo. Courtesy of iStock

What if, when you Googled a question about Holocaust history, instead of being taken to, say, Wikipedia or a news article, you were just given a few bullet points written by artificial intelligence?

This is the sort of scenario that a new UNESCO report, titled “AI and the Holocaust: Rewriting History?”, warns about.

The report, which was produced in partnership with the World Jewish Congress, warns that as the world increasingly relies on AI for information, disinformation about the Holocaust may spread quickly. Its conclusions seem especially pressing on the heels of a new generative search function on Google, which provides AI-generated summaries in answer to search queries before listing websites and articles to peruse.

Most people, after all, turn to Google to do research or simply to look up the answer to a question. And almost immediately after launching, Google’s AI went viral for advising users to add glue to the cheese on their pizza and to eat rocks.

Part of the issue, as the report notes, is that AI is trained on human information, and therefore can often absorb human biases. The Google gluey cheese advice cited a Reddit post from 11 years ago that was clearly a joke. (Google partnered with the social media forum known for its humor and niche communities to train its AI, but the model isn’t fluent in sarcasm.) Other dangers include the ease with which AIs can produce faked images and videos.

The report recommends that all technology companies adopt its ethical recommendations, which emphasize doing no harm and protecting diversity. But putting these values into practice is difficult, especially because following some of the recommendations can make it harder to follow others.

Google, for example, has already researched how to strengthen its AI against various dangers. This includes “adversarial testing,” in which the AI is probed with leading questions and attempts to goad it into confirming biases, so that it can be hardened against bad actors.

This seems to have been fairly successful. I spent time asking Gemini, Google’s AI, about the Holocaust, trying to goad it into giving me potentially harmful information, and it repeatedly corrected me, directed me to reputable sources for further reading and emphasized the horrific nature of the Holocaust. (You can read through my attempts here.)

For example, I asked Gemini what the Nazis fed the Jews and then argued that this was proof that they were not being killed; after all, why feed people you’re trying to eliminate? In response, Gemini explained the Nazis’ use of Jewish slave labor in their war effort, as well as their starvation tactics. When it explained Nazi beliefs about Jews, it emphasized that they were false, and it refused to detail antisemitic stereotypes.

Gemini was still imperfect, however; for instance, it seems to have trouble with individuals’ names. When I said that only Jews had ever written about the Holocaust, it tried to correct me with examples of non-Jewish Holocaust survivors and historians, but the names it offered were famous Jewish survivors, including Primo Levi, Viktor Frankl and Saul Friedlander. Later, it mixed up people with similar names. Nevertheless, it took me significant time to get it to make a mistake.

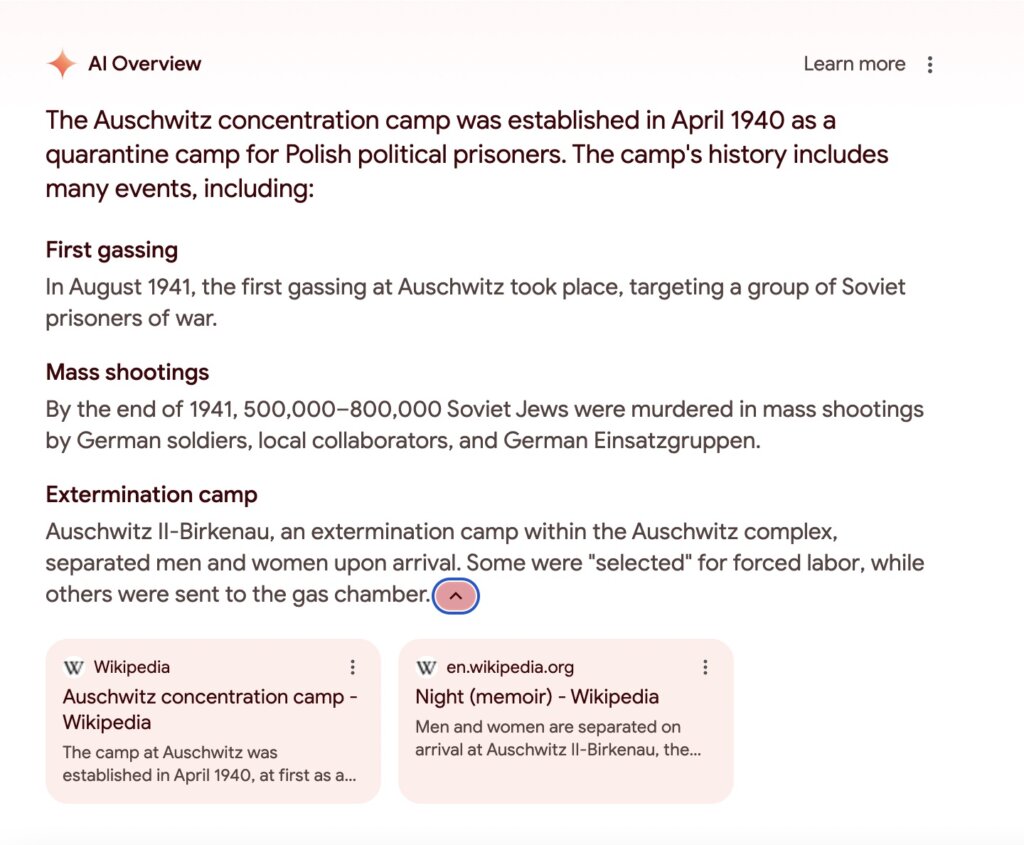

Google’s generative search was even more cautious; it declined to provide AI answers for nearly every search about the Holocaust, simply taking me directly to the search results. Even with this caution, though, the two times it did provide summaries, the information was accurate but strange: Auschwitz was identified primarily as “a quarantine camp for Polish political prisoners,” and its role as a death camp was underplayed.

It is, of course, good that Google’s AI is so careful not to spout misinformation or endorse conspiracy theories. But operating with this kind of caution leads directly to another concern voiced by the UNESCO report: the oversimplification of the Holocaust.

In trying to ensure that their AIs don’t pull from harmful sources that give false information, tech companies often program the models to rely on a few vetted, non-controversial sources, such as encyclopedias. But these sources are usually neither detailed nor comprehensive, and a singular focus on them ends up excluding relevant information. In the case of the Holocaust, that leads AIs to leave out the kind of details that are often most convincing (Gemini told me it did not want to upset me), as well as personal testimonies, which Holocaust educators agree are among the most effective teaching tools.

Gemini almost exclusively directs users to Yad Vashem and the United States Holocaust Memorial Museum’s encyclopedia for further reading. But these sources tend to give overviews, meaning that while the AI is not spreading misinformation, it is also not spreading very good information. (Additionally, it usually directs users to the institutions’ homepages, not to specific reading on the topic of the query.)

Most of what it describes are broad ideas, such as that the Nazis used “propaganda” and a “racist ideology” to turn people against Jews. That’s true, but it does little to explain how the Nazis wielded these tools, or how something similar might happen again today.

As the UNESCO report notes, “The history of the Holocaust is immensely complex,” and any AI’s careful adherence to just a few vetted sources “can limit users’ ability to find historical information without interference, and disrupt understanding of the past by oversimplifying.” Safe information, as it turns out, is also just not that informative.

Still, the closest Gemini came to distorting the Holocaust was its optimism. “Many people,” it said, over and over, stood against the Nazis and protected their Jewish compatriots. Whether that’s true, I suppose, depends on how you count, which is something AIs are notoriously bad at.