Meta inappropriately removed content related to Israel-Hamas war, oversight board concludes

Automated moderation tools removed posts that should have been allowed, according to the ruling

An employee at Facebook headquarters in Palo Alto, California. Photo by Getty Images

(JTA) — Automated content moderation tools deployed amid “a surge in violent and graphic content” on Facebook and Instagram after Oct. 7 went too far in removing posts that social media users should have been able to see, an independent oversight panel at their parent company, Meta, ruled on Tuesday.

The finding came in a review of two cases in which human moderators had restored content that automated moderation had removed. One was about a Facebook video appearing to show a Hamas terrorist kidnapping a woman on Oct. 7. The other was an Instagram video appearing to show the aftermath of a strike near Al-Shifa hospital in Gaza.

The cases were the first taken up by Meta’s Oversight Board under a new expedited process meant to allow for speedier responses to pressing issues.

In both cases, the posts were removed because Meta had lowered the bar for when its computer programs would automatically flag content relating to Israel and Gaza as violating the company’s policies on violent and graphic content, hate speech, violence and incitement, and bullying and harassment.

“This meant that Meta used its automated tools more aggressively to remove content that might violate its policies,” the board said in its decision. “While this reduced the likelihood that Meta would fail to remove violating content that might otherwise evade detection or where capacity for human review was limited, it also increased the likelihood of Meta mistakenly removing non-violating content related to the conflict.”

As of last week, the board wrote, the company had still not raised the “confidence thresholds” back to pre-Oct. 7 levels, meaning that the risk of inappropriate content removal remains higher than before the attack.

The oversight board is urging Meta — as it has done in multiple previous cases — to refine its systems to safeguard against algorithms incorrectly removing posts meant to educate about or counter extremism. The accidental removal of educational and informational content has plagued the company for years, spiking, for example, when Meta banned Holocaust denial in 2020.

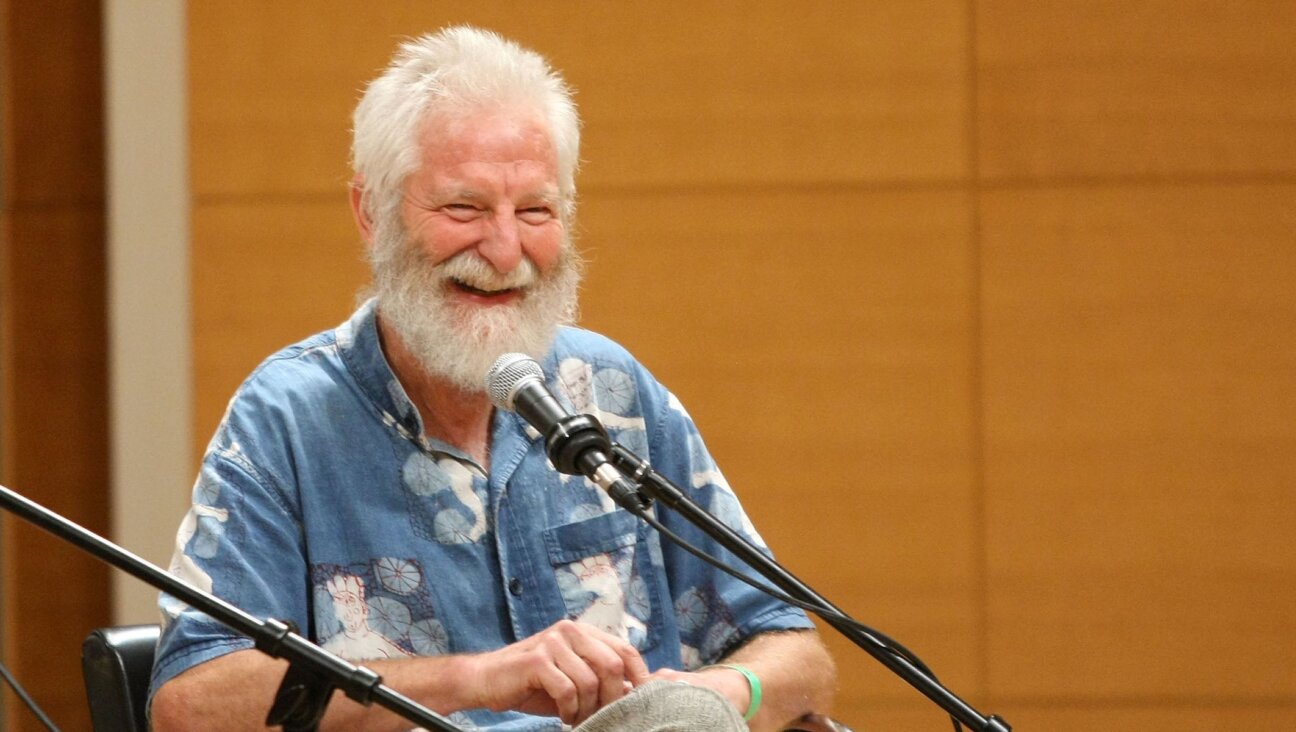

“These decisions were very difficult to make and required long and complex discussions within the Oversight Board,” Michael McConnell, a co-chair of the board, said in a statement. “The board focused on protecting the right to the freedom of expression of people on all sides about these horrific events, while ensuring that none of the testimonies incited violence or hatred. These testimonies are important not just for the speakers, but for users around the world who are seeking timely and diverse information about ground-breaking events, some of which could be important evidence of potential grave violations of international human rights and humanitarian law.”

Meta is not the only social media company to face scrutiny over its handling of content related to the Israel-Hamas war. TikTok has drawn criticism over the prevalence of pro-Palestinian content on the popular video platform. And on Tuesday, the European Union announced a formal investigation into X, the platform formerly known as Twitter, using new regulatory powers awarded last year and following an initial inquiry into spiking “terrorist and violent content and hate speech” after Oct. 7.

This article originally appeared on JTA.org.