Here’s one big reason why online antisemitism and extremism are so hard to stop

TikTok is no stranger to antisemitic trends, from teens pretending to be Holocaust victims arriving at the gates of heaven to videos of face-distorting filters set to “Hava Nagila.” Some comments on posts by or about Jews make light of the Holocaust or say that Hitler “missed one.”

But antisemitism is only one form of extremism on TikTok. A new study from the Institute for Strategic Dialogue (ISD), a think tank dedicated to analyzing and responding to extremism, found content originally produced by ISIS, videos from the 2019 Christchurch mosque shooting and many references to white supremacy.

TikTok’s policies clearly state that extremist content is forbidden on its platform. Yet in a 16-day period, the ISD analysts identified over 1,000 videos with extremist content.

All social media platforms struggle with policing extremism. But Ciaran O’Connor, the author of the ISD study, said that TikTok is harder to police than other platforms because the app’s suite of editing tools allows for more creative content.

In a phone call, O’Connor said that symbols such as the swastika or Sonnenrad are “the bread and butter” of extremist content. But TikTok’s tools and format allow creators to use nuance and subtle references to get their message across, and sometimes ride the algorithm to reach thousands of new eyes.

One video, for example, used a cartoon of workers at a pizzeria talking about the impossibility of making six million pizzas in four ovens, a Holocaust denial reference. A popular meme known as “Confused John Travolta” was coopted for Holocaust denial as well, riding the trend to tens of thousands of views.

Wide gaps in policing

While TikTok has strong policies against racist or extremist content, some essential terms, such as “extremism,” are not well-defined. The study also concludes that those policies are poorly enforced; hate speech forbidden in TikTok’s Community Guidelines can remain on the platform without being caught.

Narrowly defined bans also allow extremist creators to easily circumvent the rules; for example, searches for “Hitler” are blocked, but “H1tler” works.
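The gap between an exact-match block and the “H1tler” workaround can be illustrated with a short sketch. This is not TikTok’s search filter, which is not public; the substitution table, the blocklist and the function names below are all hypothetical, chosen only to show why a literal comparison misses simple character swaps while a normalizing comparison catches them.

```python
import unicodedata

# Hypothetical table of common digit/symbol swaps; real evasion is more varied.
LEET_MAP = str.maketrans({
    "0": "o", "1": "i", "3": "e", "4": "a",
    "5": "s", "7": "t", "@": "a", "$": "s", "!": "i",
})

# Hypothetical blocklist holding the one blocked search named in the article.
BLOCKED_TERMS = {"hitler"}


def normalize(query: str) -> str:
    """Fold Unicode compatibility forms and case, then undo common swaps."""
    folded = unicodedata.normalize("NFKC", query).casefold()
    return folded.translate(LEET_MAP)


def is_blocked_exact(query: str) -> bool:
    """Narrow ban: only the literal term (ignoring case) is blocked."""
    return query.casefold() in BLOCKED_TERMS


def is_blocked_normalized(query: str) -> bool:
    """Broader ban: compare after normalizing look-alike characters."""
    return normalize(query) in BLOCKED_TERMS


if __name__ == "__main__":
    for q in ("Hitler", "H1tler"):
        print(q, is_blocked_exact(q), is_blocked_normalized(q))
```

Even the broader check is only a partial answer: it can wrongly flag innocent queries that contain digits, and it does nothing about content whose meaning depends on context rather than spelling.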

The ISD study also found that private videos and profiles easily escaped bans. Some extremist profiles went private after attracting widespread attention, reverting to a public profile once attention had died down. Profiles with white supremacist usernames are often private, but still show up in search results.

The challenges of a creative platform

Visual media or creative content is inherently very hard to police as it is difficult to index and search on a large scale. Videos and images are “a challenge for every single social media platform, not just TikTok. It’s already the next battleground for a lot of this stuff,” said O’Connor.

O’Connor explained that many platforms are members of the Global Internet Forum to Counter Terrorism (GIFCT), which helps companies work together to police their content, in part through a shared database of visual material that enables faster identification and removal of content.

O’Connor said that TikTok tried to join GIFCT several years ago, but has not yet been approved; the company’s membership is still pending.

However, even with GIFCT support, the type of visual and creative content found on TikTok is still tricky to police because elements can have multiple meanings and videos use a nuanced combination of visuals, sounds and memes to make their point.

For example, the song “Hava Nagila” is used positively by Jewish creators or others celebrating Jewish life and culture, but the ISD study also found it was commonly used in antisemitic videos to ridicule Jews.

“It’s hard for AI and AI-informed systems to catch that,” said O’Connor.

A few viral posts go a long way

Users interact with TikTok primarily through a personalized “for you page,” which features algorithmically chosen relevant content that varies from person to person. Videos placed on users’ “for you” pages are far more likely to go viral, gaining hundreds of thousands or even millions of views and likes over a few hours or days.

TikTok is famously cagey about its algorithm, and does not facilitate third-party research on a large scale. This means that the study had to be conducted manually, with researchers individually searching for and identifying content within the app without going through the algorithm, actively seeking extremist content instead of scrolling like the average user.

The ISD found extremist videos through a mix of keyword searches and what O’Connor called “the snowball effect,” in which analysts traced creators’ follower lists and comments to find other extremist creators.

Many of the videos identified by the ISD analysts had only a few hundred views, meaning they did not reach a large audience and likely did not make it onto users’ “for you” pages. However, some did, including an anti-Asian video with two million views and a video showing a video game recreation of Auschwitz, which had 1.8 million views. Even a single extremist TikTok that gets millions of views has a wide impact.

“The algorithmic systems underlying TikTok’s product are evidently helping to promote and amplify this content to audiences that might not otherwise have found it,” concluded the study.

Before the research was released, the ISD shared its findings with TikTok, which has since removed many of the videos and accounts identified in the research; TikTok has also removed videos identified by the Forward. But O’Connor said that this is insufficient; TikTok needs a more comprehensive enforcement tool that prevents videos from being discovered in the first place.

“I think we can all agree that it’s not up to third party researchers to catch Christchurch or ISIS content,” he said.

The Forward is free to read, but it isn’t free to produce

I hope you appreciated this article. Before you go, I’d like to ask you to please support the Forward.

Now more than ever, American Jews need independent news they can trust, with reporting driven by truth, not ideology. We serve you, not any ideological agenda.

At a time when other newsrooms are closing or cutting back, the Forward has removed its paywall and invested additional resources to report on the ground from Israel and around the U.S. on the impact of the war, rising antisemitism and polarized discourse.

This is a great time to support independent Jewish journalism you rely on. Make a gift today!

— Rachel Fishman Feddersen, Publisher and CEO

Support our mission to tell the Jewish story fully and fairly.

Most Popular

- 1

Fast Forward Ye debuts ‘Heil Hitler’ music video that includes a sample of a Hitler speech

- 2

Opinion It looks like Israel totally underestimated Trump

- 3

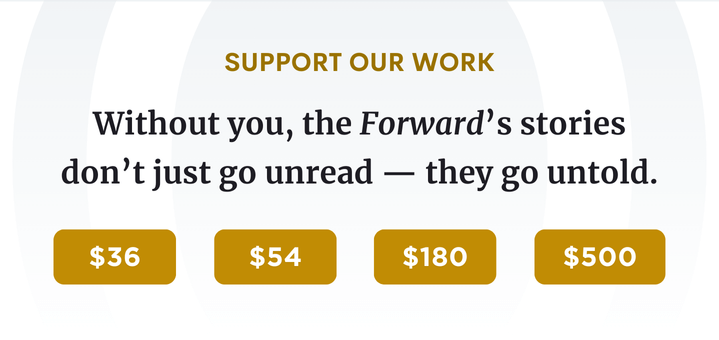

Culture Cardinals are Catholic, not Jewish — so why do they all wear yarmulkes?

- 4

Fast Forward Student suspended for ‘F— the Jews’ video defends himself on antisemitic podcast

In Case You Missed It

-

Culture How one Jewish woman fought the Nazis — and helped found a new Italian republic

-

Opinion It looks like Israel totally underestimated Trump

-

Fast Forward Betar ‘almost exclusively triggered’ former student’s detention, judge says

-

Fast Forward ‘Honey, he’s had enough of you’: Trump’s Middle East moves increasingly appear to sideline Israel

-

Shop the Forward Store

100% of profits support our journalism

Republish This Story

Please read before republishing

We’re happy to make this story available to republish for free, unless it originated with JTA, Haaretz or another publication (as indicated on the article) and as long as you follow our guidelines.

You must comply with the following:

- Credit the Forward

- Retain our pixel

- Preserve our canonical link in Google search

- Add a noindex tag in Google search

See our full guidelines for more information, and this guide for detail about canonical URLs.

To republish, copy the HTML by clicking on the yellow button to the right; it includes our tracking pixel, all paragraph styles and hyperlinks, the author byline and credit to the Forward. It does not include images; to avoid copyright violations, you must add them manually, following our guidelines. Please email us at [email protected], subject line “republish,” with any questions or to let us know what stories you’re picking up.